In 2015, Issues in Law & Medicine published “Epidemiologic and Molecular Relationship Between Vaccine Manufacture and Autism Spectrum Disorder Prevalence” by Theresa A. Deisher, Ngoc V. Doan, Kumiko Koyama, and Sarah Bwabye (Spring;30[1]:47-70. PMID: 26103708) (PDF). Dr. Theresa Deisher is the only author with a PhD; the other three have only bachelor’s degrees. Responsibility for the paper thus falls primarily on Dr. Deisher, and I will refer to it as Deisher et al. for the rest of this post. Significant issues with this article have long been pointed out in various sources. My own investigation into the numbers shows possible data fraud or, at the very least, significant problems in data handling that warrant retraction. This will be a very long post, so I will outline its three main parts and give a summary before going into each part.

Table of Contents

- First, I will cover the existing literature on Deisher, which is largely not in the academic sphere.

- Second, I will cover the possible data fraud, or gross mismanagement of data if there was no fraud.

- Third, I will deal with the need to retract this and possible further actions.

Summary

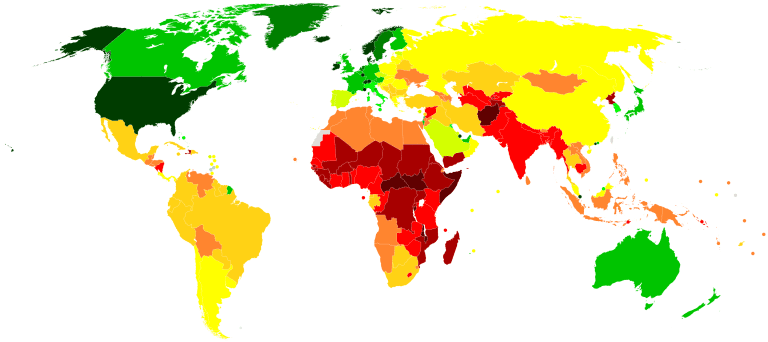

Deisher et al. claim that rubella vaccines grown on fetal cell lines cause increased autism prevalence. To this end, the paper uses two primary arguments. First, it takes the autism and MMR vaccination rates of three European countries and compares them for correlation, which it then treats as causation even though it shows only correlation. Second, the researchers found short strands of human DNA in the MMR vaccine. Even taken at face value, this is insufficient evidence for the claim that these vaccines cause autism. The problem is made worse by possible data fraud, or at the very least significant mishandling of the data, in the first argument: the paper attributes specific numbers to specific sources, but the numbers in those sources do not match the numbers in the paper. The paper also has other methodological issues already identified in the existing literature. The existing-literature section also shows that this paper is still being shared and that Deisher is still presented as a vaccine expert. Finally, I discuss the importance of retracting papers like this to maintain scientific and academic integrity. I recommend a retraction, that she no longer be presented as an expert on vaccines, and an investigation into her work.

Notes:

- I will provide links to sources in the text as a form of citation appropriate to this medium, but key more-academic sources are also listed in the Bibliography at the end.

- This may be a little dense and academic for some readers at times. I apologize. I tried to submit a version of this for academic publication, but after two attempts (Part 2 alone to Issues in Law & Medicine, then Parts 2 and 3 to another journal), I decided that posting it on my blog might be best.

Part 1: Existing Literature Calling Deisher into Question

Before diving into Part 2 on data that is either fraudulent or mishandled, which is what this article adds to the discussion, I want to compile and address other literature on this paper, and a bit on Theresa Deisher herself. The first three subsections deal with the spread of her article and her claims: first, Deisher herself in popular media, then her current research, then the spread of the article specifically. Next, I will deal with a 2019 open letter, which drew attention, in which she repeated the claims of this article. Finally, we will look at existing critiques, focusing on the comments on this article on PubPeer and on Dr. Gorski’s writing, the most extensive to date on Deisher’s article.

Deisher Is Popular Among Certain Populations

An initial question some may have about my writing a long piece on an 8-year-old paper in a low-impact journal is, “Why does it matter?” It matters because, unfortunately, she is still popular in Catholic anti-vax circles, and this article is still being spread online. I will deal with her recent media appearances here and the spread of this specific article below.

Deisher is often presented in Catholic media as some kind of expert on the issue, yet, as the evidence below shows, this is likely not accurate. I searched only since the beginning of 2020 and found that she had appeared on numerous well-known Catholic or Catholic-adjacent media sites. Several pieces present her as an expert opposing Covid vaccines: LifeSiteNews, “likely not safe and not necessary” (already highly improbable by the time of publication); LifeSiteNews again, “Dr. Theresa Deisher warned about the dangers of mRNA permanently re-writing our genetic code by making changes to our DNA” (patently false); Patrick Coffin’s Podcast (1, 2, 3), “This virus today has less than a 0.03% fatality rate,” and “15% of the very healthy young volunteers [experiencing] significant side effects” (both demonstrably false); and Inside the Vatican, “They will pose the same risks that the other aborted fetal DNA-containing vaccines do” (neither of the two main US Covid vaccines contains any DNA at all, so this seems like a stretch). Pieces from Inside the Vatican (1, 2), Catholic Family News, a YouTube channel interviewing her, Crisis Magazine, and the National Catholic Register directly repeat her claims about fetal DNA in vaccines and the damage it supposedly causes, which relates directly to this paper. Her article has also been presented to me multiple times when I have defended good theology and science against Catholic or Catholic-adjacent anti-vaxxers. Her reach is not enormous, but unfortunately, far too many Catholics are confused by her writings and presentations.

Deisher’s Current Research

Deisher has also continued to work in medical research, including with grants and approved human trials. If she presented fraudulent data in an academic publication, or if her data handling was this poor (the other possibility), I would think that either would disqualify her from being a lead researcher on such work. There are various sources for the work of AVM Biotechnology, where Deisher is the president and chief researcher, but a 2023 press release summarizes it. It notes, “AVM Biotechnology has previously been awarded five (5) highly competitive National Institute of Health SBIR grants; three (3) of these from the National Cancer Institute.” The same press release also announces a phase II trial for an immunosuppressant drug she and her company are working on.

The Spread of this Article

We cannot take this article lightly. Although it is cited only three times in the academic literature, Deisher et al. has an Altmetric score of 1393 and is “In the top 5% of all research outputs scored by Altmetric,” and in the 99th percentile for research of the same age, primarily due to non-academic citations. This section covers the academic and then the non-academic citations included in this score, dividing the non-academic citations into social media and news.

Even the three citations are rather minor. It is cited in a book chapter on vaccine hesitancy; as one of four sources for a general statement that autism has multiple causes; and, in an article on Italian vaccine law, as one of several articles cited in an Italian court case.

Instead, most of the Altmetric score comes from being cited by 2952 X users (including myself, in earlier and less extensive critiques) and 79 Facebook pages. A RAND study, “Dissemination of Vaccine Misinformation on Twitter and Its Countermeasures,” even cites a tweet alluding to this study as an example of misinformation.

Altmetric lists three news sources citing this paper. One is currently offline, but the other two appear to be reposts of a paywalled Mercola article. The main gist of the article is opposition to the idea of eating bugs. Deisher et al. is cited for a line in its section on cannibalism: “Independent research has found that vaccines manufactured in human fetal cell lines contain ‘unacceptably high levels of fetal DNA fragment contaminants,’” which is a quote from a blog that cites this article. None of these five interconnected sources is particularly reliable. Three are not rated anywhere; Mercola is rated low for reliability on the Ad Fontes chart and low for factuality on Media Bias / Fact Check, with a “Quackery” rating on its pseudoscience bar; and Global Research also gets a low factuality rating on Media Bias / Fact Check: it moves up to the second-lowest rating for pseudoscience but gets the “Tin Foil Hat” conspiracy rating. Even with such low ratings for factuality and science, this information is still being spread widely on such sites.

Deisher’s Open Letter

Deisher has also cited her article in an open letter to legislators. Health Feedback took up this letter and her article, giving it a low scientific credibility rating. The letter and Health Feedback focus more on the second claim of the paper that human DNA strands were found in MMR vaccines, which the various experts interviewed critiqued. Teja Celhar notes the study in question has “serious methodological problems.” The summary then notes that Deisher overstated her other claims: “TLR9 [a receptor she claimed would cause autism from such human DNA] senses only unmethylated DNA (like that of bacteria and viruses), whereas human DNA would be methylated. This means that even if fetal DNA was present – which was not even proven by Deisher et al. to begin with – it would not be able to activate TLR9 anyway.” There are other critiques of this letter, but they are more-or-less repetitions of these points.

Critiques on PubPeer

On PubPeer, a site for academics to comment on articles, Deisher’s article drew two long comments. Let’s examine each.

The first comment questions the whole premise.

One would expect that after the Wakefield debate editors would be doubly cautious before publishing something that hypothesises a link between vaccines and autism. Unfortunately, this article shows it is not the case. The argument is woven out of strenuous “just so” links, without adequate justification for any of the many steps required to make the purported connection; how those tiny base sequences were established to be of human origin? how to make sure that those were not simply integrated into the viral genome? how does such a small sequence of pairs would interfere with a host? has it really been demonstrated that such small sequences can somehow enter host cells?

Claiming evidence for multiple implied links without actually providing it is a serious methodological issue, even if all the data is solid. However, he also questions the data itself and raises possible issues of data selection.

But even if we take those at face value, the fundamental premise of the whole argument, the supposedly “epidemiologic” evidence, is anything but. The “evidence” is a graph that plots the average incidence of autism in three countries and the average MMR coverage in those countries, with absolutely no attempt at any statistical correlation. Once again, even if we take such data for its face value, it is mind boggling that anyone would attempt to call this “evidence”. Why those three countries were selected? Why was the US, where this problem is even more serious, left out? Why not test for correlation within each country? Why not test for correlation at all? Given the problems we already have with vaccine coverage in many places of the world, it is utterly irresponsible to publish something that weak and claim it to be evidence of anything.

Below, I will go further and show that not only is this an issue of selecting countries somewhat randomly, but there are also further issues with the data.

The second comment is more technical. It first notes that incorporation of vaccine DNA into human DNA is extremely unlikely, but asks what kind of inflammation such DNA might cause: “From an immunology and inflammology standpoint, the concern is the likelihood that exogenous human DNA would trigger inflammatory responses after being perceived by the immune system and received by receptors for DAMPs (damage associated molecular patterns).” He goes into detail about factors that might cause inflammation, but inflammation at this level from an intramuscular vaccine administered in the arm or buttocks is unlikely to affect the brain, and autism is a brain condition.

David Gorski Gives the Most Complete Rebuttal to Date

David Gorski, AKA Orac, gives a relatively complete rebuttal of this Deisher article on his website. He brings out most of the errors that exist even assuming her raw data is correct. He makes three main points worth repeating: that Deisher’s theory is extremely improbable and rests on radically insufficient evidence; that she presents a graph in a misleading way (we’ll go over this again in Part 2); and that the paper’s second argument, a cell culture experiment, has methodological issues.

Improbable

Gorski begins by noting the various steps needed for this implausible hypothesis, that human DNA in vaccines causes autism, to work. For the hypothesis to hold, the “human DNA from vaccines would have to:

- “Find its way to the brain in significant quantities.

- “Make it into the neurons in the brain in significant quantities.

- “Make it into the nucleus of the neurons in significant quantities.

- “Undergo homologous recombination at a detectable level, resulting in either the alteration of a cell surface protein or the expression of a foreign cell surface protein that the immune system can recognize.

- “Undergo homologous recombination in many neurons in such a way that results in the neurons having cell surface protein(s) altered sufficiently to be recognized as foreign.

- “That’s leaving aside the issue of whether autoimmunity in the brain or chronic brain inflammation is even a cause of autism, which is by no means settled by any stretch of the imagination. In fact, quite the opposite. It’s not at all clear whether the markers of inflammation sometimes reported in the brains of autistic children are a cause, a consequence, or merely an epiphenomenon of autism.”

This is a more extensive list of the issues raised in the PubPeer comments above. Deisher et al. present an extremely implausible conclusion without sufficient evidence for it, even assuming all the evidence is free of error.

Gorski also deals with issues in two earlier papers by Deisher, from 2010 and 2014, throughout this piece. I will not cover them here, but you can read the critiques of those papers by him, Simcha Fisher, Rational Catholic, or others. In this piece, I want to focus on her 2015 paper.

Deisher’s Bad Graph

Gorski spends a significant portion of his piece critiquing the graph in Figure 1 of Deisher et al. 2015.

The first issue he notes with the graph is that in Table 2, the paper combines MMR coverage data from three countries without defining coverage as one or two MMR shots, without a country-by-country breakdown, without a good explanation of why these countries were chosen over others, and without indicating whether it is a weighted or unweighted mean. He suspects it is an unweighted mean, since Deisher et al. use an unweighted mean when showing the three countries’ autism prevalence. He notes that this methodology “is a great way to produce the ecological fallacy in a big way.”

Gorski then compares Deisher’s graph as it appears in the paper with a graph whose y-axis is properly scaled, since in the original, the MMR coverage axis bottoms out at 86%. (Matt Carey made the second graph from Deisher’s data.) Compare Figures 1 and 2.

He states: “If we were to take these graphs at face value, a 10% drop in MMR uptake resulted in a 60% drop in autism prevalence.” That is an odd result. He concludes, “If this model held (at least in a linear fashion), there’d have been no autism if MMR uptake fell below 75%,” which is clearly absurd.
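Gorski’s extrapolation is simple slope arithmetic. A minimal sketch, assuming the “10% drop” means 10 percentage points of uptake and that the relationship is linear (his own caveat): a 60% fall over 10 points means each point removes 6% of prevalence, so prevalence reaches zero after about 16.7 points. The 92% starting uptake is an assumed illustrative value, not a figure from the paper; with it, the line reaches zero at roughly 75%.

```python
def zero_prevalence_uptake(start_uptake, uptake_drop, relative_prevalence_drop):
    """Extend the implied straight line and return the uptake (in percent)
    at which autism prevalence would reach zero.

    start_uptake: uptake before the drop, in percent (ASSUMED input).
    uptake_drop: fall in uptake, in percentage points.
    relative_prevalence_drop: fractional fall in prevalence (0.6 means 60%).
    """
    if uptake_drop <= 0 or relative_prevalence_drop <= 0:
        raise ValueError("both drops must be positive")
    # Share of the starting prevalence removed per percentage point of uptake.
    share_per_point = relative_prevalence_drop / uptake_drop
    # Prevalence hits zero once the whole starting prevalence is removed.
    points_to_zero = 1.0 / share_per_point
    return start_uptake - points_to_zero


# Gorski's figures (10-point drop, 60% prevalence drop); 92% is ASSUMED.
print(round(zero_prevalence_uptake(92.0, 10.0, 0.6), 1))
```

A straight line through two points says nothing about what happens outside them, which is why taking the graph at face value leads to an impossible conclusion.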

He also notes that the autism-rate data keeps switching which of the three countries, or which combination of them, it draws on, which is not an honest way to use such data.
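To see why switching which countries feed the average is a problem, consider a minimal sketch with hypothetical numbers (none come from Deisher et al. or the source studies): three countries whose rates never change, averaged without weights over whichever countries report in a given year. The pooled series still falls and rises, purely because the mix of countries changes.

```python
def unweighted_mean(rates):
    """Plain arithmetic mean: every reporting country counts equally."""
    return sum(rates) / len(rates)


def pooled_series(country_rates, reporting):
    """Average the rates of whichever countries report in each year."""
    return {
        year: unweighted_mean([country_rates[c] for c in countries])
        for year, countries in reporting.items()
    }


# HYPOTHETICAL prevalence (%) per country, deliberately constant over time.
country_rates = {"Country A": 0.50, "Country B": 0.10, "Country C": 0.30}

# HYPOTHETICAL reporting pattern: which countries contribute in each year.
reporting = {
    2000: ["Country A", "Country B", "Country C"],
    2001: ["Country B"],
    2002: ["Country B", "Country C"],
    2003: ["Country A", "Country C"],
}

for year, value in pooled_series(country_rates, reporting).items():
    print(year, round(value, 2))
```

The pooled value goes 0.3, 0.1, 0.2, 0.4 even though no country’s rate moved at all.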

Given all the issues, Gorski concludes this section: “The whole thing is a mess so ridiculous that it can only be due to gross incompetence on an epic scale or deliberate deception coupled with utter contempt for the antivaccine audience who is expected to eat this crappy science up. Take your pick.” This critique addresses only the graph. Gorski assumes all the data is correct, as he evidently did not look into it further, but Part 2 will call even that data into question.

Cell Culture

This is an area where I am less trained in the details, so I cannot offer critiques as specific as those on the prior argument, where the errors are in basic numbers. Gorski notes that the experiment in this paper used about 14-21 times as much double-stranded DNA as is in a vaccine and a relatively small number of cells. Then, after 24-48 hours of shaking, there was some uptake of that DNA. Gorski summarizes it: “She ramped up the concentration of DNA per number of cells to a level that has no direct relevance to physiology, marveled when she found that these cells, when flooded with DNA, actually do take up some of it, and is now claiming that her experiment indicates a grave problem that desperately needs to be studied.”

Dr. David Gorski’s critique of Deisher seems relatively complete, showing that her paper is of little value due to methodological flaws. The main flaws are a highly improbable theory of mechanism with almost no supporting data, a misleading graph, and methodological issues in a cell culture experiment.

Note: Judging by some of his comments, Gorski seems not to be a fan of Catholicism. I think Deisher’s goal of trying to make a rubella vaccine without fetal cell lines is good, but her means, built on bad pseudoscience, are extremely problematic and destroy whatever goodwill I have towards her goal.

Conclusion to Literature

Even from the existing literature, it seems fairly clear that Dr. Theresa Deisher is not an expert anyone should put their trust in regarding vaccines. Her paper has significant methodological flaws that make it unreliable. However, her paper is being widely shared online; she is continuing to do research with grants and human trials; and many Catholic or semi-Catholic publications are quoting her as a vaccine expert.

Part 2: Fraud or Significant Data Mishandling in Deisher et al. 2015

After reviewing the existing literature on Deisher et al. above, we now delve into issues with data that seem either caused by fraud or by significant data mismanagement and misleading presentation. Either of these is a serious issue for peer-reviewed science.

The focus of this critique is on Figure 1 and the data behind it in Tables 1 and 2 (63-64). Tables 1 and 2 claim to draw data from external sources, and Figure 1 claims to graph the data from these tables. However, there is a substantial difference between the data in the published sources the article claims to rely on and the data in this article, and the data is then combined and graphed in ways that do not seem to follow basic principles of sound data handling.

Even if the information and data were all accurate, at most they would show a correlation between autism and MMR coverage rates, yet the article mainly treats it as causal: it refers to this correlation as potentially or apparently causal five times (pages 48, 56, 57, 57, 59) while noting only once (page 57) that it may be mere correlation rather than causation.

This critique covers the three elements of Deisher et al.’s data: Table 1 (autism rates by nation), Table 2 (MMR coverage) briefly, and then the graph in Figure 1. Over half of it is on Table 1, which I go through with a fine-tooth comb. The critique of Figure 1 relies partly on the Gorski article cited above in the literature review.

Autism Rates by Nation (Table 1 in Deisher et al.)

For autism rates by nation, Deisher et al. list Norway, then the UK, then Sweden; this section covers them in the same order. They do not claim to have collected this data in an independent study but instead rely on prior studies. However, sometimes they leave out key details about the data, sometimes their data differs from the data presented in those studies, and sometimes the studies do not even give the data they claim to obtain from them. There is no logical explanation for this except fraud or gross mishandling of data.

Here is Table 1 from Deisher et al. (as Figure 3) so we can know what is being critiqued and what sources are claimed for the data.

Norway

Norway is the first country listed in Table 1 of Deisher et al. (Figure 3). Three sources are cited for the data, but the data is taken from only one of them, and even then it is not the data it claims to be. The three sources give broadly similar figures to one another, all of which contradict the data given by Deisher et al.

- Isaksen et al. 2012 has no breakdown by birth year, which is what the chart in Deisher et al. shows, and it is limited to certain parts of Norway: “This study is based on 31,015 children born between 1 January 1996 and 31 December 2002 and who reside in the counties of Oppland and Hedmark.” (593) Its summary of the findings: “This gives a prevalence of 51 per 10,000 (95% CI, 0.43-0.59).” Despite this prevalence of 0.51%, all of the numbers Deisher et al. use for these birth years fall in the 0.05% to 0.26% range.

- Surén et al. 2012 has data by birth year, but it varies wildly from Deisher et al. (The exact number Deisher et al. state appears only in a graph in Surén et al. 2012, but it is simply a percentage with all autistic children as the numerator and all children as the denominator, both of which are listed by sex in Table 1 [e154] of Surén et al. 2012.) Table 1 below (my table, not Deisher et al.’s) includes a column computed with simple arithmetic from the columns of children and autistic children in Table 1 of Surén et al. 2012 (Figure 4 below): rate of autism = (boys and girls with ASD) / (total boys and girls).

- Surén et al. 2013 divides respondents by subtype of autism spectrum disorder (ASD) in Table 1 (663) (replicated as Figure 5 below). The total column makes the most sense for comparison with the numbers in the previous two studies; the UK and Sweden figures all seem to use total ASD, and total ASD is what Deisher et al. appear to claim they are listing in Table 1. However, Deisher et al. take the PDD-NOS column (Pervasive Developmental Disorder, Not Otherwise Specified, a former subtype now part of ASD) for 1999, then the autism column (autistic disorder, a subtype of autism spectrum disorder) for all subsequent years. (This study combines the 1999 and 2000 birth years, yet Deisher et al. report different figures for each.) Also, the numbers for 1999-2000 and 2001 are extremely small, so randomness plays a greater role.
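The rate formula given above for Surén et al. 2012 is a pooled proportion. A minimal sketch of that calculation follows; the per-sex counts from Surén et al. 2012 are not reproduced in this post, so the numbers in the usage line are hypothetical placeholders.

```python
def asd_rate_percent(boys_asd, girls_asd, boys_total, girls_total):
    """Pooled ASD rate in percent: (boys + girls with ASD) / (all boys + girls).

    Counts are pooled before dividing; averaging the boys' and girls' rates
    instead can give a different answer when the two groups differ in size.
    """
    children = boys_total + girls_total
    if children <= 0:
        raise ValueError("need a positive number of children")
    return 100.0 * (boys_asd + girls_asd) / children


# HYPOTHETICAL counts for one birth year, for illustration only.
print(round(asd_rate_percent(boys_asd=300, girls_asd=80,
                             boys_total=30_000, girls_total=28_000), 3))
```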

Surén et al. 2012 and Surén et al. 2013 can be compared to Deisher et al. by year in the table below. The first data column shows the data Deisher et al. claim to have taken from these sources. The next two columns show the corresponding data these two studies actually report for those years. The Surén et al. 2012 and Surén et al. 2013 figures are always substantially larger than Deisher et al.’s, ranging from 1.7 to 11 times larger, while the two Surén et al. studies are always closer to each other than to Deisher et al. (the larger number is 1.02 to 1.4 times the smaller number for the same year). Finally, the last three columns show the breakdown by subtype of autism spectrum disorder in Surén et al. 2013; the cells marked “taken for” show the data Deisher et al. actually used.

| Birth year | Deisher 2015: % autistic | Surén 2012: % autistic | Surén 2013: total ASD (%) | Surén 2013: autism (%) | Surén 2013: Asperger (%) | Surén 2013: PDD-NOS (%) |
|---|---|---|---|---|---|---|
| 1999 | 0.14 | 0.702 | 0.55 | 0.05 (taken for 2000) | 0.37 | 0.14 (taken for 1999) |
| 2000 | 0.05 | 0.526 | | | | |
| 2001 | 0.17 | 0.539 | 0.53 | 0.17 | 0.14 | 0.22 |
| 2002 | 0.26 | 0.514 | 0.73 | 0.26 | 0.23 | 0.24 |
| 2003 | 0.29 | 0.493 | 0.67 | 0.29 | 0.18 | 0.22 |
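The ratios quoted above can be rechecked directly from the table. A minimal sketch follows; one assumption is that Surén et al. 2013’s combined 1999-2000 total (0.55) applies to both 1999 and 2000, since that study does not separate those birth years.

```python
# % autistic by birth year, transcribed from the Norway table above.
# ASSUMPTION: Surén 2013's combined 1999-2000 total (0.55) is used for both years.
deisher = {1999: 0.14, 2000: 0.05, 2001: 0.17, 2002: 0.26, 2003: 0.29}
suren_2012 = {1999: 0.702, 2000: 0.526, 2001: 0.539, 2002: 0.514, 2003: 0.493}
suren_2013 = {1999: 0.55, 2000: 0.55, 2001: 0.53, 2002: 0.73, 2003: 0.67}


def ratios(numerators, denominators):
    """Year-by-year ratio numerators[year] / denominators[year]."""
    return {year: numerators[year] / denominators[year] for year in denominators}


def larger_over_smaller(a, b):
    """Year-by-year ratio of the larger of two values to the smaller."""
    return {year: max(a[year], b[year]) / min(a[year], b[year]) for year in a}


vs_2012 = ratios(suren_2012, deisher)
vs_2013 = ratios(suren_2013, deisher)
between = larger_over_smaller(suren_2012, suren_2013)

all_vs_deisher = list(vs_2012.values()) + list(vs_2013.values())
print(f"Surén / Deisher: {min(all_vs_deisher):.2f} to {max(all_vs_deisher):.2f}")
print(f"Surén 2012 vs 2013: {min(between.values()):.2f} to {max(between.values()):.2f}")

# Are the two Surén studies closer to each other than either is to Deisher et al.?
print(all(between[y] < min(vs_2012[y], vs_2013[y]) for y in deisher))
```

Under that assumption, the Surén figures come out between 1.7 and 11 times Deisher et al.’s, and in every year the two Surén studies are closer to each other than either is to Deisher et al.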

Deisher et al. claim: “the average autism spectrum disorder prevalence in the United Kingdom, Norway and Sweden dropped substantially after the birth year 1998 and gradually increased again after the birth year 2000” (48), but the authors did not take the total data, only subtypes, and the total data does not show this pattern for Norway. Also, if the PDD-NOS number is removed, this data shows a relatively constant growth in the rate of autism disorder from before the reduction in MMR vaccination until after its return.
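The effect of the one-year subtype switch can be checked mechanically. A minimal sketch using the values from the Norway table above: Deisher et al.’s series (PDD-NOS for 1999, the autism subtype afterward) bottoms out in 2000, while the autism-subtype figures alone, with 1999-2000 as the single combined cohort Surén et al. 2013 report, rise at every step.

```python
def is_strictly_increasing(values):
    """True if every value is larger than the one before it."""
    return all(a < b for a, b in zip(values, values[1:]))


def lowest_year(series):
    """Birth year (key) holding the smallest value."""
    return min(series, key=series.get)


# Deisher et al.'s Norway series: 1999 is Surén 2013's PDD-NOS figure,
# 2000 onward are its "autism" subtype figures.
deisher_norway = {1999: 0.14, 2000: 0.05, 2001: 0.17, 2002: 0.26, 2003: 0.29}

# Surén 2013 "autism" subtype only; 1999-2000 is one combined cohort there.
autism_subtype = {"1999-2000": 0.05, "2001": 0.17, "2002": 0.26, "2003": 0.29}

print(lowest_year(deisher_norway), is_strictly_increasing(list(deisher_norway.values())))
print(is_strictly_increasing(list(autism_subtype.values())))
```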

United Kingdom

Moving on to the UK, the numbers from Deisher et al. again have difficulty lining up with the data from the studies cited as data sources. This time, two sources are cited.

- Latif and Williams 2007 is organized by date of diagnosis, not by birth year, while the table in Deisher et al. is organized by birth year, so the two cannot be lined up coherently without also knowing age at diagnosis, since diagnosis does not always occur at the same age. Also, Latif and Williams do not give a raw data table of diagnoses by year; their Figure 1 (482) is a graph of such data (replicated as Figure 6 here), which could be read back into a table, albeit less accurately than would be preferable. Over time, the graph shows a generally upward trajectory of total cases. Deisher et al. seem to ignore the earlier data in favor of a period where what this study calls “Kanner” autism is being replaced by “Asperger” and “other” types of autism among diagnoses (a significant drop in “Kanner” while the overall numbers are generally stable). Latif and Williams offer a table of cases for 1994 to 2003 (combined, not by year) with a column for cases per 10,000. Converted to percentages (what Deisher et al. use), these are: Kanner autism 0.17%, other 0.13%, Asperger’s 0.354%, and total ASD 0.612%. The average of the data given by Deisher et al. for the six years in this period is 0.169%, which could match “Kanner” but does not match any of the other figures. As with Surén et al. 2013 above, this is problematic: Deisher et al. present a subtype figure as if it represented all autism spectrum disorders. It is even more problematic here, as “Kanner” may be an even more restrictive category than the autism subtype in Surén et al. 2013. Also, Latif and Williams give a total over several years, while Deisher et al. claim an annual rate not found in Latif and Williams.

- Lingam et al. 2003 focuses on earlier years, mostly before the years Deisher et al. report, or on years in which, as the study admits, the children are so young that most would not yet have been diagnosed with ASD. Lingam et al. limits itself to those born in 1998 or before. It also notes, “there was limited data for birth years 1997–99 and extrapolation using polynomials is not usually accurate outside the range of the data.” (669) This means there is limited data for most of the range Deisher et al. give, with only 1995 and 1996 as relevant years. Even these years rely to a degree on extrapolation, that is, on estimates of how many more children will be diagnosed as they get older. Finally, this study gives only a graph, not a table, so numbers must be read off the graph and are accurate only to about ±0.01%. The numbers in Deisher et al. are off by almost a factor of two and are substantially lower than those of both UK studies Deisher et al. claim as sources (as noted above, Latif and Williams 2007 give a total ASD prevalence of 0.612%, compared to 0.082-0.274% in Deisher et al.). The numbers Deisher et al. list for the subsequent years (1999-2001) are exceptionally low compared to other available data points; given how close these birth years are to the study date, and the fact that autism is often diagnosed somewhat later in life, they do not seem accurate and show artificially low numbers. Figure 7 and Table 2 display the data from this study and show that it does not line up with Deisher et al.

| Birth year | Deisher 2015: % autistic | Lingam 2003: observed cases (%) | Lingam 2003: expected cases (%) |
|---|---|---|---|
| 1995 | 0.274 | 0.46 | 0.52 |
| 1996 | 0.219 | 0.37 | 0.45 |
| 1997 | 0.13 | No line | |
| 1998 | 0.265 | 0 | No line |
| 1999 | 0.11 | | |
| 2000 | 0.061 | | |
| 2001 | 0.082 | | |
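The “almost a factor of two” can be checked for the two birth years where Lingam et al. 2003 give values. A minimal sketch, assuming the ±0.01 percentage-point reading error mentioned above applies to each graph-read value while Deisher et al.’s figures are treated as exact:

```python
READ_ERROR = 0.01  # ASSUMED +/- reading error (percentage points) from the graph

# % autistic, transcribed from the UK table above (years with Lingam values only).
deisher = {1995: 0.274, 1996: 0.219}
lingam = {
    "observed": {1995: 0.46, 1996: 0.37},
    "expected": {1995: 0.52, 1996: 0.45},
}


def ratio_with_bounds(source_value, deisher_value, tol=READ_ERROR):
    """(low, central, high) for source / Deisher, widening only the graph reading."""
    return tuple((source_value + d) / deisher_value for d in (-tol, 0.0, tol))


for kind, values in lingam.items():
    for year, value in values.items():
        low, mid, high = ratio_with_bounds(value, deisher[year])
        print(f"{year} {kind}: {mid:.2f} (range {low:.2f} to {high:.2f})")
```

Even at the low end of the reading error, Lingam et al.’s observed figures are more than 1.6 times Deisher et al.’s for both years.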

Neither source lists any data for 1999-2001 birth years (or 1998-2001 if you count the zero in 1998 as no data, or 1997-2001 if you realize the likely underdiagnosis in observed cases due to their age at the time of the study). Thus, although Deisher et al. claim these two studies as the source for this data, half of the data is not provided in either source.

Sweden

Sweden is the final nation in Table 1. Only one study is listed here. Nygren et al. 2012 lists 2000, 2005, and 2010 as the dates, but the study examines 2-year-olds born in 1997-1998, 2003-2004, and 2007-2008. Deisher et al. simplify this to 1998, 2003, and 2007, which oddly compresses the gap between the second and third samplings: what is listed as 2007 should be 2008 if it were consistent with the prior two samplings in taking the second of two possible years. The last data point, 2007/2008, is particularly important for Deisher et al.’s thesis of a rise in autism at that time, as it is the only data beyond 2003 they cite for autism rates, and it makes up a substantial part of the curve in her graph. Yet Nygren et al. itself notes that the 2010 figure was based on screening the whole population, while the 2000 and 2005 figures were based only on children referred to a center for diagnosis at or before age 2, a methodology that would yield substantially lower numbers for the two earlier data points. No post-age-2 diagnoses are included in this study, yet later diagnosis or non-diagnosis is common in practice when screening is not done. Thus, taking the raw data here without accounting for the change in methodology is not an honest approach to the data. Ockham’s razor would attribute a substantial portion of the difference, likely the majority, to the very different sampling methodology. In fact, a main point of the study was to compare screening all 2-year-olds for autism with periods when no screening was done. The abstract of the study concludes, “Results suggest that early screening contributes to a large increase in diagnosed ASD cases.” (1491) So this difference in methodology was central to the study, not some footnote accidentally missed. Even the table from which they took this data (presented as Figure 8) indicates as much.

Even setting this aside, Deisher et al.’s numbers still seem somewhat off from the source data, as seen in Table 3 here.

Table 3: Autism prevalence in Sweden: Deisher 2015 vs Nygren 2012

| Birth year | Deisher 2015: % autistic | Nygren 2012: % autistic |
|---|---|---|
| 1998 | 0.12 | 0.18 |
| 2003 | 0.038 | 0.04 |
| 2007 | 0.52 | |
| 2008 | | 0.64 or 0.80 (denominator: all 2-year-olds or those screened) |
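The two Nygren et al. figures for the 2007-2008 cohort differ only in their denominator. A minimal sketch, assuming both rates share the same case count (an assumption, not something stated above): their ratio then gives the share of 2-year-olds who were screened, and shows how much the choice of denominator alone moves the prevalence.

```python
def implied_screened_share(rate_all_children, rate_screened_only):
    """Share of 2-year-olds screened, implied by two rates with one numerator.

    rate_all = cases / all_children and rate_screened = cases / screened, so
    rate_all / rate_screened = screened / all_children.
    ASSUMPTION: both published rates use the same number of cases.
    """
    if not 0 < rate_all_children <= rate_screened_only:
        raise ValueError("rate over all children should not exceed rate over screened")
    return rate_all_children / rate_screened_only


share = implied_screened_share(0.64, 0.80)  # Nygren 2012, 2007-2008 cohort
print(f"implied share screened: {share:.0%}")
```

Under that assumption, about 80% of the cohort was screened, and the denominator choice alone moves the figure by a quarter (0.64% versus 0.80%).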

In conclusion, in none of the three nations studied does the autism prevalence listed in Deisher et al. match the prevalence listed in the studies cited as sources. The closest was Surén et al. 2013 for Norway, but even there, the figures are for a subtype of autism, and Deisher et al. switched subtypes for one year, which changes the graph from steady growth to a U-shape. Deisher et al. repeatedly show numbers that are off from the sources they claim by a factor of two or more. They also miss huge methodological issues in the studies that limit their usefulness for tracking prevalence over time, such as failing to note that one study included only those diagnosed with autism by a certain date, so younger cohorts at that date would naturally show lower numbers, or that another study explicitly compared screening all 2-year-olds with counts of 2-year-olds diagnosed with autism in prior years when no screening was done, even though without screening many autistic children are obviously not diagnosed at age 2. (I was not diagnosed until my 30s, for example, and I know many others diagnosed at 3, 4, 7, etc., who likely would have been diagnosed at two if screened.) Thus, this whole data set in Deisher et al. is useless. It results from either serious mishandling or intentional fraud.

MMR Coverage (Table 2 in Deisher et al.)

Moving on to Table 2, the critique is similar to what Gorski offered above. Deisher et al. provide inadequate documentation for anyone to examine the data. The MMR rate is given only for the three countries combined, even though the sources differ for each country, and no specific source is cited: the paper names only “The Norwegian Institute of Public Health,” “The National Board of Health and Welfare,” and “The Public Health England,” without any specific document or website, or any indication that the authors made a public records request that was fulfilled for the study. If they obtained the data by request, showing each country separately, as they did for autism rates, would have let readers see whether autism prevalence mirrored MMR coverage on a nation-by-nation basis or was just a statistical anomaly in which autism rates in one country changed as vaccination rates in another did (even if Table 1 is accurate, which seems unlikely given what was stated above). The authors also fail to define what they mean by “MMR Coverage”: does it mean one dose or the standard two-dose regimen, and at what ages? Nor do they say how they combined the three countries’ data: a simple arithmetic mean, a population-weighted mean, or something else?

Graph Comparing Autism Rate and MMR Coverage (Figure 1 in Deisher et al.)

Moving on to Figure 1 in Deisher et al. (63), four obvious issues arise, three of which Gorski already raised. First, how are the various countries weighted, and why are they not dealt with separately? Why were these specific countries and this specific date range chosen? Is this seeking out data to match a preconceived narrative rather than examining all the data? None of this methodology is explained. This is particularly problematic because the graph is meant to show a pattern over time, yet the mix of countries contributing data changes from year to year, which would make any such trend hard to draw out even if the data problems mentioned above did not exist. David Gorski notes this methodology “is a great way to produce the ecological fallacy in a big way.”

Second, not starting one of the Y-axes at zero is deceptive, and if both axes start at zero, the plausibility of causation, as opposed to mere correlation, drops to near zero. According to Deisher’s numbers, the lowest rate of MMR vaccination is 87.2% and the highest is 94.3%, so the highest is 1.08 times the lowest. According to her numbers, the lowest rate of autism is 0.05% and the highest is 0.52%, so the highest is 10.4 times the lowest. If MMR caused autism, the logical conclusion from these very different factors of change would be that it was responsible for over 10,000% of autism diagnoses (the claimed effect has over 100 times the proportional magnitude of the claimed cause [9.4 vs. 0.08: the difference from 1 in each case]), which is logically incoherent. Gorski (crediting Matt Carey, though it seems to have since been removed from his site) provides a version of the graph with both datasets normalized and a zero on the Y-axis; it was presented above in Figure 2. This shows why claiming that the red line causes the blue line is not credible. (I will repeat Figures 1 & 2 from above, showing this difference, for ease of reading.)
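The proportional comparison above can be reproduced with a short Python sketch. It uses only the lowest and highest values quoted in the paragraph, the variable names are illustrative, and, like the text, it measures each change as its ratio minus 1. It checks the arithmetic only; it is not itself a test of causation.

```python
# Lowest and highest values from Deisher et al., as quoted above (in %).
mmr_low, mmr_high = 87.2, 94.3       # MMR coverage
autism_low, autism_high = 0.05, 0.52  # autism prevalence

# Fold change: how many times larger the highest value is than the lowest.
mmr_ratio = mmr_high / mmr_low           # roughly 1.08
autism_ratio = autism_high / autism_low  # roughly 10.4

# "Difference from 1": the relative change in each series.
mmr_change = mmr_ratio - 1        # roughly 0.08
autism_change = autism_ratio - 1  # roughly 9.4

print(f"MMR coverage ratio: {mmr_ratio:.2f}")
print(f"Autism ratio:       {autism_ratio:.1f}")
print(f"Relative change in autism / relative change in MMR: "
      f"{autism_change / mmr_change:.0f}x")
```

The last line comes out above 100, which is the “over 100 times” figure in the text.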

Third, Deisher et al. seem to take an unweighted arithmetic mean of the data from these countries, without accounting for the different populations of the countries or regions studied. This can be seen for most years in Table 4; the years where it does not hold are discussed below. This is far easier to do but is not accurate for the combined population, since the populations of these countries vary substantially. For example, in 2001 the UK had about 13 times the population of Norway, yet Deisher et al. took the simple mean, treating the two as if they had equal populations. This creates substantial differences in autism rates (greater than 0.03 percentage points) in 2001 and 2003. Oddly, for 1998 they instead seem simply to take the UK figure rather than compute either kind of mean with the Swedish data. One could argue that the Swedish methodology for 2000 and 2005 (1998 and 2003 birth years) was incomplete, but then the Swedish figure should have been dropped for 2003 as well, and it was not. If one is to make that kind of selection about which data to include, some explanation of the reasoning should be given, but none is.

Table 4: Autism prevalence (%) by year: Deisher et al. Table 1 data, calculated means, and Figure 1 values

The Norway, UK, and Sweden columns are the raw data from Table 1 (Deisher et al.); the two mean columns are math based on Table 1 (Deisher et al.); the last column is the value shown in Figure 1 (Deisher et al.), estimated from the graph.

| Year | Norway | UK | Sweden | Mean of previous | Weighted* mean of previous | Shown in graph (estimate) |
|---|---|---|---|---|---|---|
| 1995 | | 0.274 | | 0.274 | 0.274 | 0.28 |
| 1996 | | 0.219 | | 0.219 | 0.219 | 0.22 |
| 1997 | | | | | | |
| 1998 | | 0.265 | 0.12 | 0.1925 | 0.246 | 0.27 |
| 1999 | 0.14 | 0.11 | | 0.125 | 0.112 | 0.12 |
| 2000 | 0.05 | 0.061 | | 0.0555 | 0.060 | 0.05 |
| 2001 | 0.17 | 0.082 | | 0.126 | 0.088 | 0.12 |
| 2002 | 0.26 | | | 0.26 | 0.260 | (no data given) |
| 2003 | 0.29 | | 0.038 | 0.164 | 0.123 | 0.17 |
| 2004 | | | | | | |
| 2005 | | | | | | |
| 2006 | | | | | | |
| 2007 | | | 0.52 | 0.52 | 0.520 | 0.52 |

(* Weights are based on the 2002 populations of these three nations according to the European Commission: Norway was 4,524,100, the UK was 58,921,500, and Sweden was 8,909,100. This may not be precise for other years but should be close enough to show where Deisher et al. failed to weight the numbers when computing the mean.)
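The two mean columns of Table 4 can be checked with a short Python sketch. It assumes, as the footnote does, that the 2002 populations are close enough for every year, and it covers only the years in which two countries report data. The names `TABLE1`, `POPULATION`, `unweighted_mean`, and `population_weighted_mean` are illustrative, not taken from Deisher et al.

```python
# 2002 populations (European Commission), as given in the footnote.
POPULATION = {"Norway": 4_524_100, "UK": 58_921_500, "Sweden": 8_909_100}

# Autism prevalence (%) from Table 1 of Deisher et al., as laid out in Table 4,
# for the years where two countries report a value.
TABLE1 = {
    1998: {"UK": 0.265, "Sweden": 0.12},
    1999: {"Norway": 0.14, "UK": 0.11},
    2000: {"Norway": 0.05, "UK": 0.061},
    2001: {"Norway": 0.17, "UK": 0.082},
    2003: {"Norway": 0.29, "Sweden": 0.038},
}


def unweighted_mean(rates: dict) -> float:
    """Simple average: every country counts equally, whatever its size."""
    return sum(rates.values()) / len(rates)


def population_weighted_mean(rates: dict) -> float:
    """Average in which each country counts in proportion to its population."""
    total = sum(POPULATION[country] for country in rates)
    return sum(rate * POPULATION[country] for country, rate in rates.items()) / total


for year, rates in TABLE1.items():
    simple = unweighted_mean(rates)
    weighted = population_weighted_mean(rates)
    print(f"{year}: unweighted {simple:.4f}, weighted {weighted:.3f}, "
          f"gap {simple - weighted:+.3f}")
```

Run as written, this reproduces the two mean columns of Table 4 to rounding, including the gap of more than 0.03 percentage points in 2001 and 2003.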

Finally, Deisher et al. selectively removed the 2002 data point that does not match her narrative: data for 2002 is published in Table 1 but left out of the graph in Figure 1. This makes no sense unless they want to argue that Norway’s data is not good on its own without at least one of the other two nations, but they make no such argument. Accounting for these two problems, especially the omission of 2002, changes the shape of the graph in ways that go against the thesis of Deisher et al.; the different curves are shown in Figure 9.

Conclusion to Part 2

This part has sought to check the data in Deisher et al. 2015 and determine if the data matches the claimed sources and is used honestly and fairly.

In Table 1 of Deisher et al., all three sources from Norway showed substantially higher numbers than Deisher et al. report. They picked particular subtypes of autism, switched which subtype they used partway through, and claimed to be talking about autism as a whole when the data covered only a subtype. For the UK, Deisher et al. claim to draw on two sources, but more than half of the data points were never even estimated in either source, and her numbers cannot be derived from the data in those two sources, as there is simply no annualized data for those years. For Sweden, Deisher et al. cite one source and take its raw data at face value for the main data point showing a growing autism prevalence once MMR was again more widely used. However, this study of Swedish autism rates indicates that the methodology changed for the last year examined: for the first two years it simply recorded how many 2-year-olds received an autism diagnosis when one had to be sought out, while for the last year it screened all 2-year-olds. Anyone with even a passing familiarity with autism would know that this alone would produce a substantially higher number than in the prior two years, and the study says as much explicitly. Yet Deisher et al. make no account of this.

Table 2 of Deisher et al. provides no sources, no breakdown by country for the data on MMR coverage, and no methodology on how the data from various countries was combined.

Even setting aside the bad data in Tables 1 & 2, the graph in Figure 1 of Deisher et al. has several additional issues. First, an unweighted mean is used despite the substantially different populations of the three countries, with no explanation of why this methodology or these countries were chosen. Second, the Y-axis for MMR coverage does not start at zero, so the change looks substantially more dramatic than it is. Starting this axis at zero removes any plausibility of causation from the data. Third, data points included in Table 1 that do not align with the thesis of Deisher et al. 2015 are skipped.

Ultimately, the problems with how Deisher et al. 2015 use data from other sources in Tables 1 & 2, and subsequently in Figure 1, are so significant that the paper, or at least these elements of it, should be retracted. It should also be investigated for possible direct fraud.

Part 3: The Need to Retract Pieces Like This

In early 2023, the data in Part 2 was sent to the editors of Issues in Law & Medicine with a request to retract Deisher et al. (2015). It was submitted as an article for peer review, as that seemed to be the only way to submit it. The response was, “It does not meet our scholarly requirements, and too much time has passed since the publication of Dr. Deisher’s article.”

There were a few minor issues with scholarship, like a slight mix of citation styles and use of the first person (my fault), but nothing substantially lacking. The bigger question is why a journal would decline to retract a clearly inaccurate article simply because it was eight years old. This seems at odds with general practice, as retractions in the scientific literature “often relate to papers that have long been published.” (Pulverer 2015) The growth in retractions seems to reflect increased awareness of scientific misconduct. However, that growth is likely far from sufficient: in surveys, around 1-2% of scientists admit to having fabricated, falsified, or modified data or results at least once, yet despite growth over the decade from 2001 to 2011, retractions touched only about 0.02% of published articles in 2010/2011 (Van Noorden 2011). So there are plenty of published papers with false data that have yet to be retracted.

Journals may have different standards for retraction and may need to be pushed to retract (Van Noorden 2011), but setting some undefined date after which no retraction will be considered is not a reasonable standard. This is especially important for lower-impact journals like Issues in Law & Medicine (impact factor of 0.36 or 0.263, according to SCI Journal), where nobody may have dug deep into the results at the time of publication.

Even though these errors were easy to find for anyone looking in the right place, and did not take long to find, evidently nobody checked the claimed sources and compared them to the results. Retractions already move at a glacial pace, even in simple cases, and the incentives for editors already favor publishing more and retracting less. (Besançon 2022) An arbitrary time cap will only further entrench the problem of peer-reviewed research remaining in print that should really be retracted.

A time cap is also contrary to general practice at more reputable journals. In 2022-2023, Marc Tessier-Lavigne came under fire over five studies he co-authored between 1999 and 2009, which led to his resignation as president of Stanford. For all five, the gap between publication and the problems being raised was longer than the eight-year gap here.

Conclusion

In conclusion, Issues in Law & Medicine should retract Deisher et al. 2015. However, this should not be the end; smaller journals need a better policy for when substantial errors are found in a paper years after publication, as nobody may have examined it in detail until then. This is particularly important on issues where public policy or health is concerned. A bad retraction policy causes problems when research travels outside the academic sphere to places where the same rigor is not always applied. For example, while this paper has been cited only three times in the academic literature, it has an Altmetric score of 1392, which ranks it as top research by that measure. Leaving this paper unretracted gives the public a distorted picture of reality. There should be some prestige bonus, or something similar, for journals that retract papers like this, to help reduce public confusion. The scientific community needs to give journals proper credit when they retract papers and not simply blame them for the decisions of prior editors.

Also, two things should happen with regard to Deisher. First, people need to look more deeply into her research here and elsewhere to determine the causes of these problems (fraud, gross mismanagement of data, etc.), and, based on the analysis of people more skilled in those research areas, possible sanctions on her capacity to do research might be put in place. I cannot prove fraud here, but whether it is fraud or the mishandling and misrepresentation of data seen here, the problem is serious. Second, outlets need to stop calling her a vaccine expert. I understand that those from a Catholic or Catholic-adjacent perspective are encouraged by her desire to make vaccines without fetal cell lines. I agree with you on that goal. However, we cannot use bad or fraudulent science to pursue it. Every explanation I can think of for the errors noted in Part 2 makes Deisher out to be a scientist who should not be trusted from a scientific perspective. Catholics, let’s support good science, as the Church has repeatedly done throughout history.

Let’s hope this ends the saga of Deisher’s various claims about vaccines and autism, which have disturbed a number of people.

References

Besançon, L., Bik, E., Heathers, J. and Meyerowitz-Katz, G. (2022). Correction of scientific literature: Too little, too late! PLOS Biology, 20(3), p.e3001572. doi:https://doi.org/10.1371/journal.pbio.3001572.

Deisher, T.A., Doan, N.V., Koyama, K. and Bwabye, S. (2015). Epidemiologic and Molecular Relationship Between Vaccine Manufacture and Autism Spectrum Disorder Prevalence. Issues in Law & Medicine, 30(1), pp.47–70.

Gorski, D. (2015). More horrible antivaccine ‘science’ from Theresa Deisher. [online] Respectful Insolence. Available at: https://www.respectfulinsolence.com/2015/08/24/more-horrible-antivaccine-science-from-theresa-deisher/ [Accessed 1 Nov. 2023].

Isaksen, J., Diseth, T.H., Schjølberg, S. and Skjeldal, O.H. (2012). Observed prevalence of autism spectrum disorders in two Norwegian counties. European Journal of Paediatric Neurology, 16(6), pp.592–598. doi:https://doi.org/10.1016/j.ejpn.2012.01.014.

Latif, A.H.A. and Williams, W.R. (2007). Diagnostic trends in autistic spectrum disorders in the South Wales valleys. Autism, 11(6), pp.479–487. doi:https://doi.org/10.1177/1362361307083256.

Lingam, R. (2003). Prevalence of autism and parentally reported triggers in a north east London population. Archives of Disease in Childhood, 88(8), pp.666–670. doi:https://doi.org/10.1136/adc.88.8.666.

Nygren, G., Cederlund, M., Sandberg, E., Gillstedt, F., Arvidsson, T., Gillberg, I.C., Westman Andersson, G. and Gillberg, C. (2012). The Prevalence of Autism Spectrum Disorders in Toddlers: A Population Study of 2-Year-Old Swedish Children. Journal of Autism and Developmental Disorders, 42(7), pp.1491–1497. doi:https://doi.org/10.1007/s10803-011-1391-x.

Pulverer, B. (2015). When things go wrong: correcting the scientific record. The EMBO Journal, 34(20), pp.2483–2485. doi:https://doi.org/10.15252/embj.201570080.

Surén, P., Bakken, I.J., Aase, H., Chin, R., Gunnes, N., Lie, K.K., Magnus, P., Reichborn-Kjennerud, T., Schjølberg, S., Øyen, A.-S. and Stoltenberg, C. (2012). Autism Spectrum Disorder, ADHD, Epilepsy, and Cerebral Palsy in Norwegian Children. Pediatrics, 130(1), pp.e152–e158. doi:https://doi.org/10.1542/peds.2011-3217.

Surén, P., Stoltenberg, C., Bresnahan, M., Hirtz, D., Lie, K.K., Lipkin, W.I., Magnus, P., Reichborn-Kjennerud, T., Schjølberg, S., Susser, E., Øyen, A.-S., Li, L. and Hornig, M. (2013). Early Growth Patterns in Children with Autism. Epidemiology, 24(5), pp.660–670. doi:https://doi.org/10.1097/ede.0b013e31829e1d45.

Van Noorden, R. (2011). Science publishing: The trouble with retractions. Nature, 478(7367), pp.26–28. doi:https://doi.org/10.1038/478026a.

No on cares what you say because you are literally mentally retarded, a liar and fraud, and you promote a deadly bioweapon as morally obligatory.

How many untruths can you fit in one line?

“literally mentally retarded” – I did a doctoral degree, not usually possible if that is true

“a liar and fraud” – I’ve surely lied a few times but I strive to avoid it and don’t do so often

“you promote a deadly bioweapon as morally obligatory” – I have never promoted any bioweapon. If you refer to COVID vaccines, which I think you do, they are clearly good, not something to fear.

You sir, are DEAD wrong scientifically, politically and worst of all, religiously. We all know how you tried to refute Dr Deisher’s work as though you are some sort of qualiffied scientist, which of course, you are not! And it appears you are also a shocking portrayal of a priest who tried to refute Dr. Deisher’s work from a scientific perspective and failed miserably since you have ZERO credibility AND in fact, your theories have been widely discredited by science experts, while Dr. Deisher’s work is strongly supported. You sir, are both a disgrace to proven science – which soundly refused to agree with you and refused to print your nonsense and a greater disgrace to the priesthood. Shame on you and God have mercy on your soul.

You make a lot of claims but do not provide any evidence.

Your first claim is that I am wrong. Please point out any errors I made so I can correct them. Did I get a number slightly wrong somewhere or did I miss a source in one of those papers on autism rates?

Your second claim is that my theories (vaccines are morally good & do not cause autism) have been proven wrong while Deisher’s theory that fetal DNA in vaccines causes autism has been proven right. On both the overall safety & effectiveness of vaccines, & that they don’t cause autism, I have seen many well done peer-reviewed studies and a few dozen more serious scientific sources agreeing. I have yet to see one scientifically convincing argument to the contrary.

Finally, you claim I am not a qualified scientist. I am reasonably competent in this area, but one cannot be an expert in everything. I do note my limitations where I rely on Gorski’s refutation of the latter part of Deisher’s paper, on DNA and cells. Anyone with one class of college stats (& likely even less) comparing the numbers in the papers listing autism rates with Deisher’s can see she is not accurate in reporting them. My expertise is primarily in moral theology, so I am an expert on truth-telling, and I really wonder whether you are open to it, given how stridently you write against the available evidence.

Nonetheless, if you have evidence, please share. I am open to being proven wrong with evidence. The claims in your comment are completely devoid of evidence.

Thank you for this extensive article! It’s so sad that Catholics keep repeating these unproven, unfounded hypotheses.

If you want to comment, please remember truth and charity. Don’t accuse someone in a heterosexual marriage of being homosexual without evidence, and if you want to argue that vaccines cause autism, please give evidence rather than just saying something like “everyone knows,” when in fact most of us know this is not the case. (I deleted a proposed comment that had both of these issues.)